Metadata in data teams’ daily workflows, a masterclass with data leaders, and more 🔥

✨ Spotlight: How can metadata bring context to data users where they are and when they need it?

Welcome to this week's edition of the ✨ Metadata Weekly ✨ newsletter.

Every week I bring you my recommended reads and share my (meta?) thoughts on everything metadata! ✨ If you’re new here, subscribe to the newsletter and get the latest from the world of metadata and the modern data stack.

✨ Spotlight: Bringing metadata into data teams’ daily workflows

Picture this: you’re in a BI tool like Looker and wonder “Can I trust this dashboard?” or “What does this metric mean?” The LAST thing you want to do is open up another tool (aka the traditional data catalog), search for the dashboard, and browse through metadata to answer that question. Data practitioners today want context where they are and when they need it.

Take the data mesh. It introduces the concept of federated computational governance, a system that uses feedback loops and bottom-up input from across the organization to naturally federate and govern data products. Metadata is what makes this possible.

With usage metadata about which assets people actually use, it’s possible to create a health score for each data product that reflects how much it is used and updated. The products could then be sorted by health score and matched to their data product owners. For great products, the owner could get a Slack message: “Congratulations! Your data products are doing great.” Low-quality or outdated products could be automatically deprecated or removed, with a ticket created in Jira for the relevant owner.
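To make that workflow a bit more concrete, here is a minimal Python sketch. It assumes each data product exposes two pieces of usage metadata, weekly query count and days since its last update. The `DataProduct` fields, the 70/30 weighting, the 90-day freshness window, and the score thresholds are all hypothetical choices for illustration, and the Slack and Jira steps are represented as returned actions rather than real API calls.

```python
from dataclasses import dataclass


@dataclass
class DataProduct:
    name: str
    owner: str
    weekly_queries: int     # hypothetical usage metadata: queries in the last 7 days
    days_since_update: int  # hypothetical freshness metadata


def health_score(p: DataProduct, max_queries: int) -> float:
    """Blend usage and freshness into a 0-100 score (hypothetical 70/30 weighting)."""
    usage = p.weekly_queries / max_queries if max_queries else 0.0
    freshness = max(0.0, 1 - p.days_since_update / 90)  # decays to 0 after 90 days
    return round(100 * (0.7 * usage + 0.3 * freshness), 1)


def route(products: list[DataProduct], healthy: float = 70.0,
          unhealthy: float = 20.0) -> list[tuple[str, str, str]]:
    """Sort products by score and decide which action each owner should receive."""
    max_q = max((p.weekly_queries for p in products), default=0)
    # Sort on the score only, so DataProduct objects are never compared directly.
    scored = sorted(((health_score(p, max_q), p) for p in products),
                    key=lambda t: t[0], reverse=True)
    actions = []
    for score, p in scored:
        if score >= healthy:
            # Stand-in for a Slack notification to the owner.
            actions.append(("slack", p.owner,
                            f"Congratulations! {p.name} is doing great (score {score})."))
        elif score < unhealthy:
            # Stand-in for a Jira ticket asking the owner to review or deprecate.
            actions.append(("jira", p.owner,
                            f"Review or deprecate {p.name} (score {score})."))
    return actions
```

Products in the middle band get no action in this sketch; a real setup might nudge those owners too, but the thresholds are where each team would encode its own definition of "healthy".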

Metadata from lots of different places is the key to automating and orchestrating fundamental concepts behind the data mesh like democratization, discoverability, trust, security, and accessibility. But how do you bring this metadata back into the daily workflows of teams? How do you use all this context to improve all the other tools in the data stack?

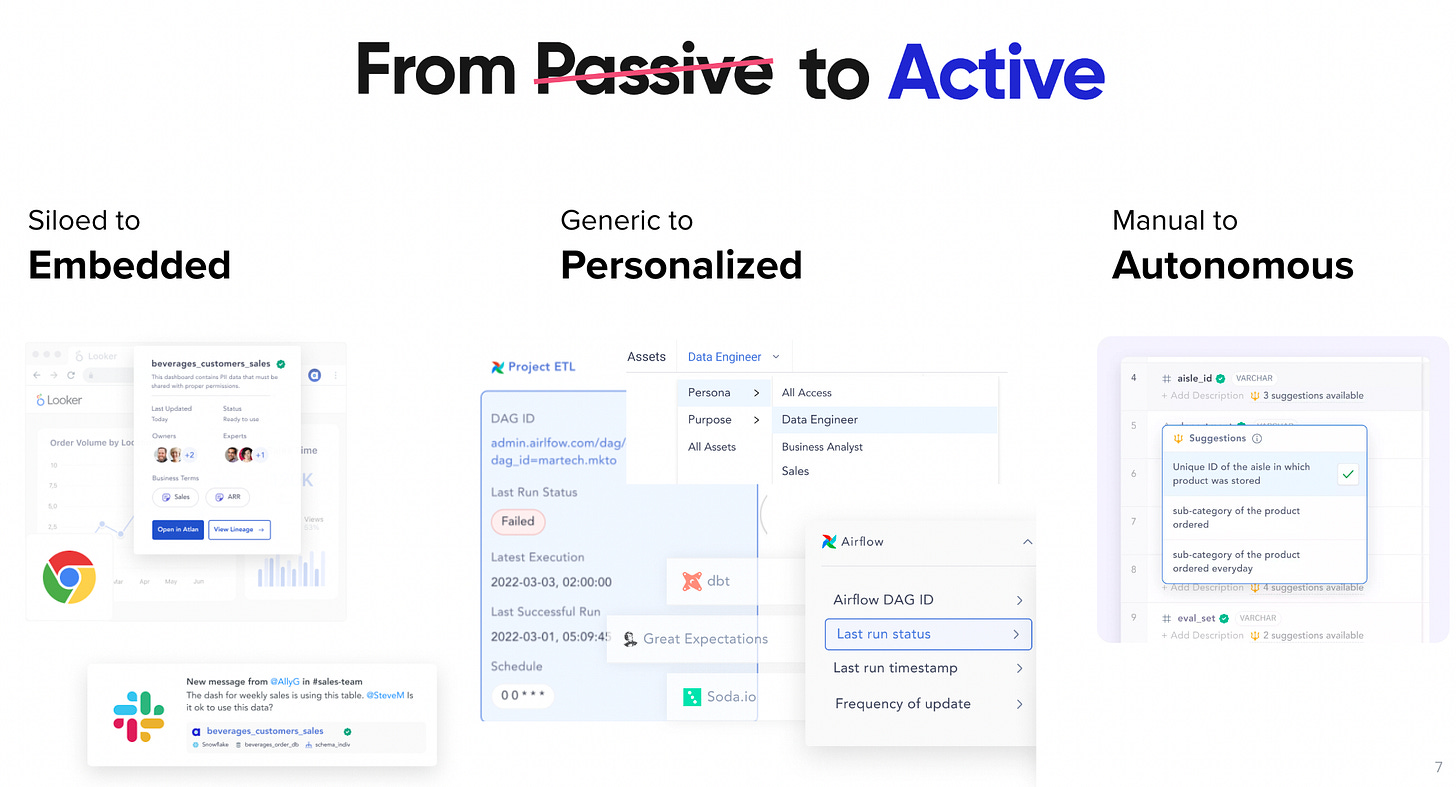

This is where I believe active metadata comes in. Instead of locking metadata up in a silo, active metadata transforms the way we get context about data.

From generic to personalized: Data teams are diverse. Analysts, engineers, scientists, and architects all have their own preferences, and context means different things to different personas: data engineers care about pipeline health and data quality tests, whereas analysts care about column descriptions and frequency distributions. If Netflix can serve personalized experiences to you and me, why serve the same generic experience to all the humans of data?
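As a rough illustration of persona-based context, the sketch below filters one asset’s metadata down to the fields a given persona cares about. The persona names and field keys (`pipeline_health`, `column_descriptions`, and so on) are hypothetical, mirroring the examples in the paragraph; a real catalog would store this metadata in its own schema.

```python
# Hypothetical mapping from persona to the metadata fields they care about most.
PERSONA_FIELDS: dict[str, list[str]] = {
    "data_engineer": ["pipeline_health", "quality_tests"],
    "analyst": ["column_descriptions", "frequency_distribution"],
}


def personalized_context(asset_metadata: dict, persona: str) -> dict:
    """Return only the metadata fields relevant to the given persona.

    Personas without a mapping fall back to the full, generic metadata view.
    """
    fields = PERSONA_FIELDS.get(persona)
    if fields is None:
        return dict(asset_metadata)
    # Skip fields the asset doesn't have rather than raising a KeyError.
    return {f: asset_metadata[f] for f in fields if f in asset_metadata}
```

The fallback keeps the generic view available for anyone the mapping doesn’t cover, so personalization narrows what is shown by default without hiding metadata entirely.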

From manual to autonomous: Metadata management has traditionally been pretty… manual. Whether it’s manual quality checks for important reports or updates to GDPR classifications, intelligent bots can help reduce routine, manual data work with automated workflows. But simply touting AI/ML as the solution to everything is… well… just marketing. Every company, industry, and context is different, and a single ML algorithm won’t solve all your data management problems. This is where an open framework for building automation and bots (think RPA) can really help the industry turbocharge metadata automation.
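An open automation framework can start as simply as a registry of small, team-written rules. Here is a minimal sketch of a bot that suggests GDPR classification tags for columns using plain pattern-matching rules rather than ML. The `rule` decorator, the `GDPR:PII` tag, and the sample column values are hypothetical and do not reflect any particular catalog’s or RPA tool’s API.

```python
import re
from typing import Callable, Optional

# A rule inspects one column (name plus a sample value) and returns a tag or None.
Rule = Callable[[str, str], Optional[str]]

RULES: list[Rule] = []


def rule(fn: Rule) -> Rule:
    """Register a classification rule; each team plugs in rules that fit its context."""
    RULES.append(fn)
    return fn


@rule
def email_by_name(column: str, sample: str) -> Optional[str]:
    # Flag columns whose name mentions email, e.g. "contact_email" or "e-mail".
    return "GDPR:PII" if re.search(r"e-?mail", column, re.IGNORECASE) else None


@rule
def email_by_value(column: str, sample: str) -> Optional[str]:
    # Flag columns whose sample value looks like a single email address.
    pattern = r"[^@\s]+@[^@\s]+\.[a-z]{2,}"
    return "GDPR:PII" if re.fullmatch(pattern, sample, re.IGNORECASE) else None


def classify(columns: dict[str, str]) -> dict[str, set[str]]:
    """Run every registered rule over each column and collect suggested tags."""
    suggestions: dict[str, set[str]] = {}
    for column, sample in columns.items():
        tags = set()
        for r in RULES:
            tag = r(column, sample)
            if tag:
                tags.add(tag)
        if tags:
            suggestions[column] = tags
    return suggestions
```

Because each rule is just a function, a company can register rules that match its own naming conventions and regulations without touching the bot’s core loop, and the output stays a suggestion that a human can review before any classification is changed.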

From siloed to embedded: Imagine a world where data catalogs don’t live in their own “third website”. Instead, a user can get all the context where they need it — either in the BI tool of their choice or whatever tool they’re already in, whether that’s Slack, Jira, the query editor, or the data warehouse.

I go into detail about leveling up your data platform with active metadata in my recent chat with Tobias Macey on the Data Engineering Podcast. You can learn more here.

📚 From my reading list

Reflecting on DataAISummit2022 by Neelesh Salian

Data teams are getting larger, faster by Mikkel Dengsøe

Why do people want to be analytics engineers? by Benn Stancil

My story and why I write about data by Madison Mae

What Great Data Analysts Do — and Why Every Organization Needs Them by Cassie Kozyrkov

I’ve also added some more resources to my data stack reading list. If you haven’t checked out the list yet, you can find and bookmark it here.

💙 Special Invite for Metadata Weekly Readers

This month we’re hosting our first masterclass for the data community, and I’d love Metadata Weekly readers to join us. This is a virtual session, so you can sign up and join from wherever you are. 🙂

🤓 Masterclass with Data Leaders from WeWork

When WeWork’s IPO failed and COVID forced the business to divest and cut costs, its data team set out to solve two big challenges: (1) rebuilding trust, internally and externally, and (2) building the right tech stack.

Next week, I’m hosting Harel Shein, Head of Data Engineering at WeWork, and Emily Lazio, Data Product Architect at WeWork. Both of them have been through it all and are joining this masterclass to share what they’ve learned.

Join us to learn how WeWork’s 15-member data team, with 1,500 dependent data users, rebuilt a culture of trust amid many ups and downs. In this masterclass, you will learn WeWork’s approach to:

👉 Adopting a modern data stack with Snowflake, dbt, and Atlan

👉 Digging deep into user pains through a data product thinking mindset

👉 Finding the right metadata platform through an in-depth evaluation process

👉 Taking an agile approach to implementation, including creating column-level lineage

👉 Building a data community through DataOps enablement programs like WeWork University

👉 Structuring the team behind it all: Building a DataOps function

You can save your spot for the masterclass by signing up at this link.

P.S. Have you been enjoying the content from Metadata Weekly? It would mean the world to me if you could take a moment and give a shout-out to this special edition on social. 💙

See you next week!